Best VPN Services in 2025: Secure Your Online World

Discover the best VPN services of 2025 for privacy, speed, streaming, and security. Compare top VPNs like ExpressVPN, NordVPN, and Surfshark to protect your online activity.

Discover how chatbots work behind the scenes with large language models, transformers, and AI training. A deep dive into the tech powering modern AI.

In recent years, chatbots have evolved from simple rule-based scripts to complex, near-human conversational agents. The secret behind this leap in capability? Large Language Models (LLMs). Whether you're chatting with a customer support bot or experimenting with AI tools like ChatGPT, you're witnessing the power of LLMs in action. But how exactly do these systems work under the hood?

In this blog, we’ll peel back the layers of abstraction to explore the intricate machinery behind modern chatbots powered by large language models.

Table of contents [Show]

Before diving into LLMs, it’s worth understanding how chatbot technology has progressed.

This generative capability is enabled by a specific kind of neural network architecture: the Transformer.

Introduced in the 2017 paper "Attention Is All You Need", the Transformer architecture revolutionized natural language processing (NLP). Unlike RNNs or LSTMs, which process text sequentially, Transformers handle input in parallel, allowing for faster training and greater scalability.

At its core, a Transformer uses self-attention mechanisms to determine the relevance of each word in a sentence relative to others. This enables it to capture context over long passages — a crucial capability for generating coherent, context-aware responses.

For example, in the sentence:

"The trophy doesn't fit in the suitcase because it's too small."

A Transformer-based model can track that "small" likely refers to the suitcase, not the trophy — a nuanced inference that stumps many older models.

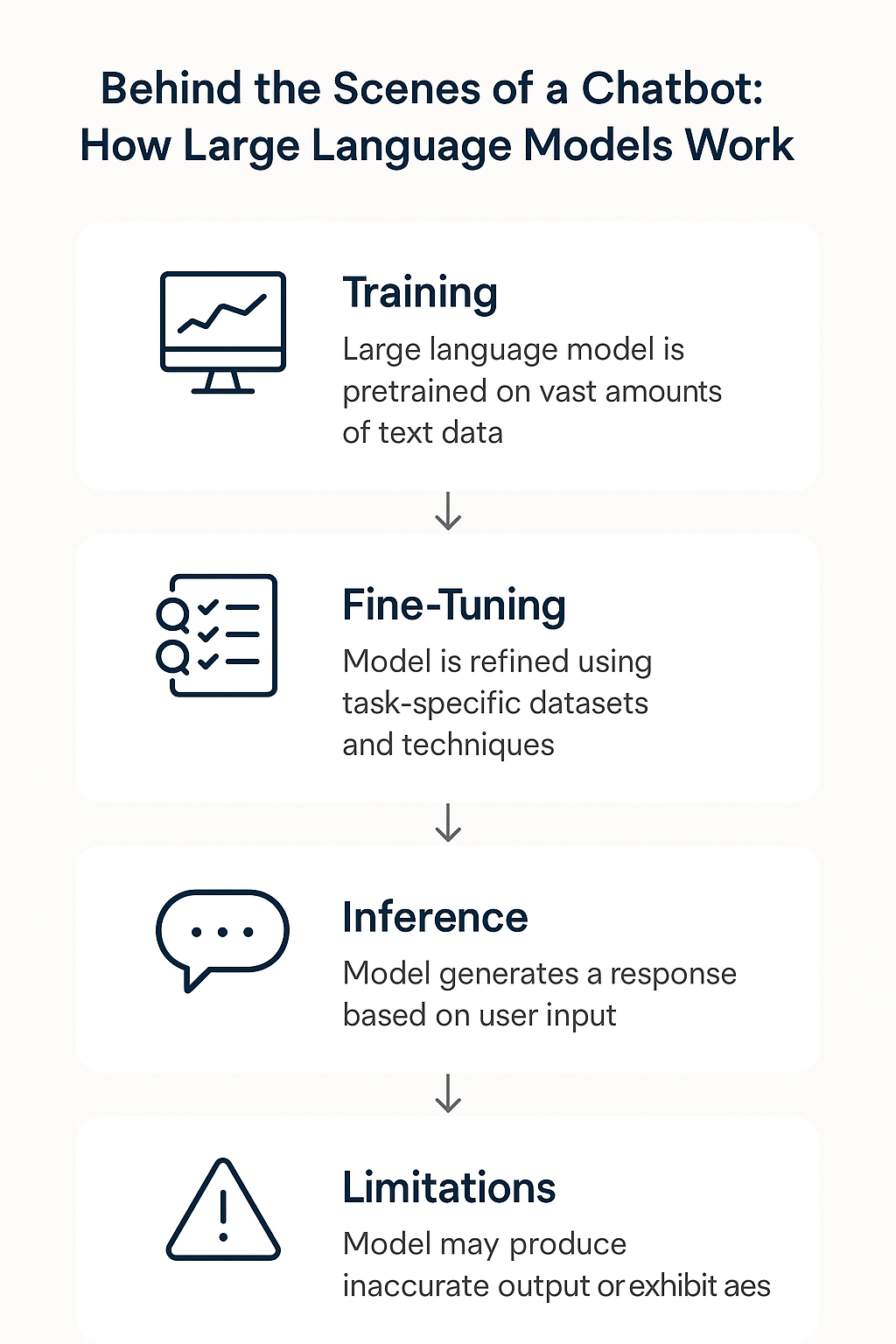

Training a large language model is a massive undertaking. Models like GPT-4 are trained on hundreds of billions of words pulled from books, websites, forums, and other publicly available sources. This pretraining phase involves:

This process can require thousands of GPUs running for weeks or months, costing millions of dollars in compute resources.

Once pretrained, an LLM is a powerful but raw tool. To make it helpful and safe, developers fine-tune it using various methods:

The result is a chatbot that not only generates plausible text but does so in ways that align with human values, expectations, and safety guidelines.

Once trained and deployed, the chatbot engages in inference — the process of generating responses on the fly.

Here’s a simplified version of what happens when you ask a chatbot a question:

While this process seems lengthy, optimizations and dedicated hardware allow it to occur in milliseconds.

Despite their capabilities, LLM-powered chatbots have limitations:

These issues are the focus of ongoing research in AI safety, interpretability, and fairness.

LLMs are quickly expanding beyond text:

The frontier of LLM development is shifting from understanding language to reasoning, planning, and acting in the world.

Behind the conversational ease of a chatbot lies a symphony of algorithms, data, and engineering. Large language models are not just statistical parrots — they are emergent systems capable of surprisingly rich understanding and generation.

As we continue to unlock their capabilities and understand their limitations, the next generation of AI-powered agents will be even more capable — and even more deeply woven into the fabric of how we communicate, learn, and create.

Author’s Note:

Interested in diving deeper? Check out the original Transformer paper, or explore the latest open-weight models like Meta’s LLaMA or Mistral’s Mixtral to get hands-on with LLMs.

Discover the best VPN services of 2025 for privacy, speed, streaming, and security. Compare top VPNs like ExpressVPN, NordVPN, and Surfshark to protect your online activity.

Can machines ever generate hypotheses, conduct experiments, and revise theories like human scientists? With AI evolving rapidly, we may be closer than we think.

Master advanced text generation with the OpenAI API. Learn expert prompt engineering, temperature control, function calling, and real-world GPT-4 use cases to build smarter AI applications.